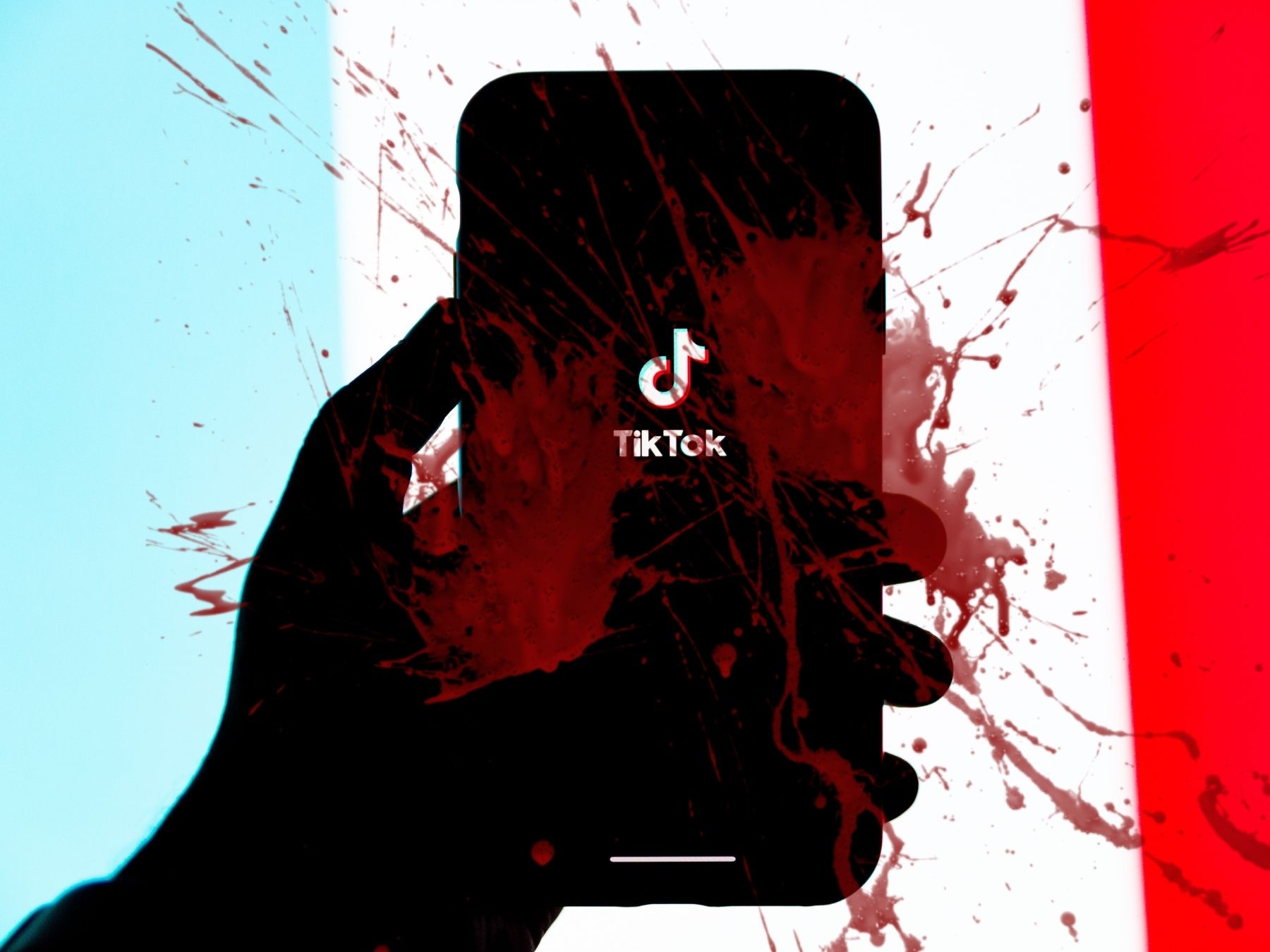

What started as a creative platform full of entertaining dances, innocent challenges, and funny videos has taken a wrong turn, claiming the lives of innocent children and teenagers.

The rise in popularity of TikTok has led to a sharp increase in unsafe and life-threatening challenges, which include swallowing strange objects, putting dangerous chemicals on the skin, or inserting foreign items into body parts. Many of these challenges are viewed by millions of young people, who then feel pressured to try them out themselves and be part of a “community”.

Misinformation is spreading faster than wildfire, and it is just as devastating and dangerous.

It’s nearly impossible to round up a list of TikTok’s unmoderated challenges and beauty or health advice because the content keeps multiplying to satisfy its users' short attention spans and their need for new things.

Slugging, for example, which currently has 385M+ views, advises people to sleep with a thick layer of petroleum jelly on their faces for hydration. However, dermatologists say that doing this could actually induce breakouts and leave the skin in a worse state than it was in to begin with.

Among the worst on the platform are the “Benadryl challenge”, where users are encouraged to take large amounts of the medicine to induce hallucinations, and the “Blackout challenge”, which promotes a form of self-strangulation by challenging users to see how long they can go holding their breath. Both have already taken innocent lives due to misinformation.

But who's to blame? The platform, creators, or both?

Accountability goes both ways here.

Stronger community management and strict content moderation need to be in place on every social media platform. On the other side of the coin, creators must recognize their responsibility to curb the spread of misinformation.

Behind every view, like, or comment is an actual human life, not just a vanity metric.

In response, TikTok has recently started moderating certain content and keywords on its platform to limit the spread of dangerous, disturbing, or even fabricated information. When a banned keyword is searched, the app shows a dedicated landing page with resources to help users identify harmful content. It promotes a 4-step process: Stop, Think, Decide, and Act, which encourages users to report suspicious content rather than liking or sharing it.

-x-